Modern Web Development Trends in 2024

The web development landscape continues to evolve rapidly. Here are the key trends shaping modern web development in 2024.

Docusaurus is a powerful static site generator that makes it easy to create documentation sites and blogs. In this post, we'll explore how to set up your first Docusaurus blog and customize it to match your brand.

Sessions are critical to the operation of ZooKeeper. Every operation a client submits to ZooKeeper is associated with a session. When a session ends for any reason, the ephemeral nodes created during that session disappear.

The client initially connects to any server in the ensemble, and only to a single server. It uses a TCP connection to communicate with the server, but the session may be moved to a different server if the client has not heard from its current server for some time. Moving a session to a different server is handled transparently by the ZooKeeper client library.

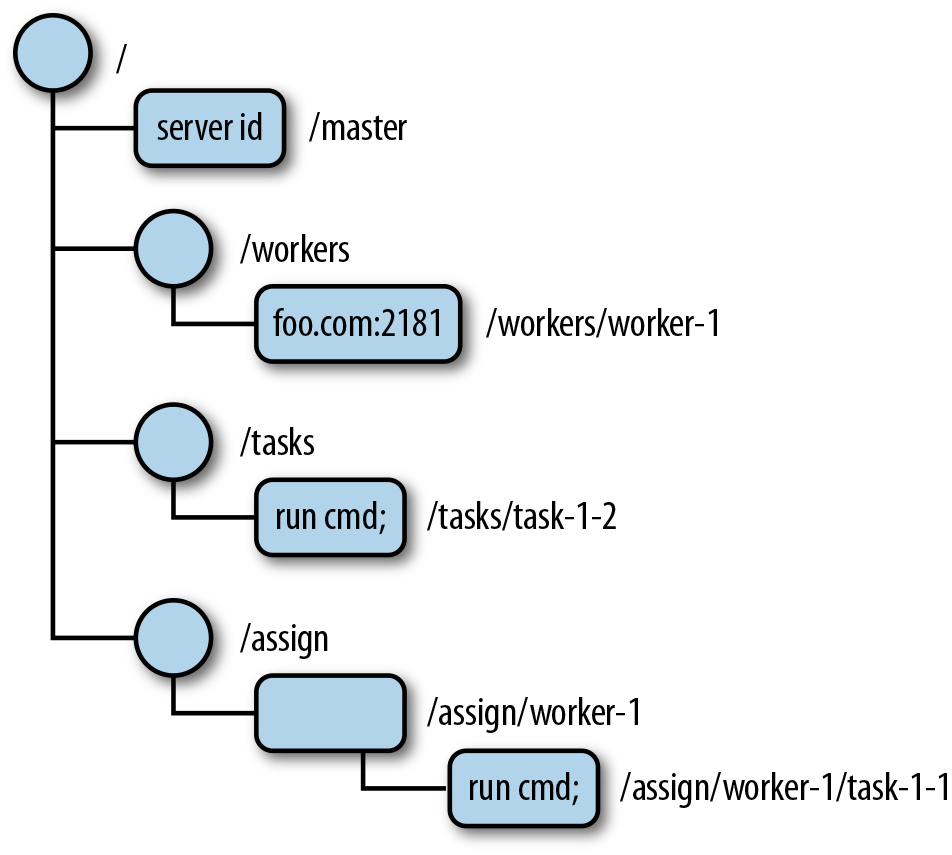

ZooKeeper has a hierarchical namespace (as shown below), much like a distributed file system. The only difference is that each node in the namespace can have data associated with it as well as children. It is like having a file system that allows a file to also be a directory.
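To make the "file that is also a directory" idea concrete, here is a minimal in-memory sketch. It is a toy model only, not the ZooKeeper client API: the class `ToyZnode` and its methods are hypothetical names invented for this illustration.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.TreeMap;

// Toy model of a hierarchical namespace (hypothetical, NOT the ZooKeeper API).
// Every node carries its own data AND may have children.
final class ToyZnode {
    private final byte[] data;
    private final Map<String, ToyZnode> children = new TreeMap<>();

    ToyZnode(String data) {
        this.data = data.getBytes(StandardCharsets.UTF_8);
    }

    // Adds a child under this node and returns it.
    ToyZnode addChild(String name, String childData) {
        ToyZnode child = new ToyZnode(childData);
        children.put(name, child);
        return child;
    }

    // Resolves a slash-separated path such as "/app/config"; returns null if any part is missing.
    ToyZnode resolve(String path) {
        ToyZnode current = this;
        for (String part : path.split("/")) {
            if (part.isEmpty()) {
                continue; // skip the empty segment produced by the leading slash
            }
            current = current.children.get(part);
            if (current == null) {
                return null;
            }
        }
        return current;
    }

    String dataAsString() {
        return new String(data, StandardCharsets.UTF_8);
    }

    int childCount() {
        return children.size();
    }

    public static void main(String[] args) {
        ToyZnode root = new ToyZnode("");
        ToyZnode app = root.addChild("app", "owner=team-a");
        app.addChild("config", "timeout=30");

        // "/app" holds data of its own and also has a child, like a file that is also a directory.
        System.out.println(root.resolve("/app").dataAsString());
        System.out.println(root.resolve("/app").childCount());
        System.out.println(root.resolve("/app/config").dataAsString());
    }
}
```

Here `/app` stores a value and is also the parent of `/app/config`, which an ordinary file system would not allow.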

ZooKeeper is an open-source, centralised coordination service used to coordinate services and manage application configuration across a large number of hosts in a distributed environment.

Coordinating the services in a distributed application is a complex process. ZooKeeper was designed to be a robust service that enables application developers to focus mainly on their application logic rather than on coordination. It exposes a simple API, similar to a filesystem API, that allows developers to implement common coordination tasks, such as electing a master server, managing group membership, and managing metadata.

ZooKeeper was open-sourced to Apache by Yahoo. Apache ZooKeeper has become a standard for organising services in Hadoop, Kafka, and other distributed frameworks.

Message passing is a technique for inter-process communication (IPC), or for inter-thread communication within the same process. It lets two parallel processes, distributed or not, communicate in synchronous or asynchronous mode. The communication is carried out by sending messages (functions, signals, and data packets) to recipients.
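As a small illustration of the inter-thread case, the sketch below passes messages between two threads through a `BlockingQueue` from the Java standard library. The class name `MessagePassingDemo`, the method `exchange`, and the `__STOP__` sentinel are assumptions made for this example; real systems often use richer message types and a different shutdown scheme.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

final class MessagePassingDemo {
    // Hypothetical sentinel that tells the receiver to stop.
    private static final String STOP = "__STOP__";

    // Sends every message to a receiver thread and returns what the receiver collected.
    static List<String> exchange(List<String> messages) {
        BlockingQueue<String> mailbox = new LinkedBlockingQueue<>();
        List<String> received = new ArrayList<>();

        Thread receiver = new Thread(() -> {
            try {
                while (true) {
                    String message = mailbox.take(); // blocks until a message arrives
                    if (message.equals(STOP)) {
                        break;
                    }
                    received.add(message);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        receiver.start();

        try {
            for (String message : messages) {
                mailbox.put(message); // the sender never touches the receiver's state directly
            }
            mailbox.put(STOP);
            receiver.join(); // after join, reading 'received' from this thread is safe
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while passing messages", e);
        }
        return received;
    }

    public static void main(String[] args) {
        System.out.println(exchange(List.of("hello", "world")));
    }
}
```

The sender and receiver share only the queue. The unbounded `LinkedBlockingQueue` makes sending asynchronous; swapping in a `SynchronousQueue` would make each send wait for the receiver, which is the synchronous mode.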

Normalisation is the process of eliminating redundancy, minimising the use of null values, and preventing the loss of information by establishing relations and ensuring data integrity.

Data should be stored only once, and data that can be calculated from other data already held in the database should not be stored at all. During normalisation, redundancy must be removed, but not at the expense of breaking data integrity rules.

The removal of redundancy helps to prevent insertion, deletion, and update errors, since the data is only available in one attribute of one table in the database.

HashMap doesn't preserve any order by default. If order is required we need to sort it explicitly according to the requirement.

In this article I have tried to explain how to sort a HashMap based on values.
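One common way to do this, sketched below, is to sort the map's entries by value and collect them into a `LinkedHashMap`, which keeps insertion order. The class and method names (`SortMapByValue`, `sortByValue`) and the sample data are made up for this illustration; the original article may take a different approach.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

final class SortMapByValue {
    // Returns a new map whose iteration order follows ascending values.
    // The input map itself is left unchanged.
    static <K, V extends Comparable<? super V>> Map<K, V> sortByValue(Map<K, V> input) {
        return input.entrySet().stream()
                .sorted(Map.Entry.comparingByValue())
                .collect(Collectors.toMap(
                        Map.Entry::getKey,
                        Map.Entry::getValue,
                        (first, second) -> first, // keys are unique, so this merge is never needed
                        LinkedHashMap::new));     // LinkedHashMap preserves the sorted order
    }

    public static void main(String[] args) {
        Map<String, Integer> scores = new HashMap<>();
        scores.put("carol", 72);
        scores.put("alice", 91);
        scores.put("bob", 85);

        // Prints {carol=72, bob=85, alice=91}
        System.out.println(sortByValue(scores));
    }
}
```

For descending order, the comparator can be wrapped with `Collections.reverseOrder(...)`, for example `sorted(Collections.reverseOrder(Map.Entry.comparingByValue()))`.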

A linked list is a dynamic data structure. The number of nodes in a list is not fixed and can grow and shrink on demand. Any application that has to deal with an unknown number of objects can benefit from a linked list.

Linked lists and arrays are similar since they both store collections of data. The terminology is that arrays and linked lists store "elements" on behalf of "client" code. The specific type of element is not important since essentially the same structure works to store elements of any type. The size of an array is fixed, whereas a linked list is dynamic. Inserting new elements at the front or in the middle of an array is also potentially expensive, because existing elements need to be shifted over to make room.
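The difference in insertion cost can be seen in a minimal sketch. `IntLinkedList` below is a hypothetical singly linked list of `int` values written for this illustration (it is not `java.util.LinkedList`), and `insertAtFront` shows the array counterpart for comparison.

```java
final class IntLinkedList {
    // One node holds a value and a reference to the next node.
    private static final class Node {
        final int value;
        final Node next;

        Node(int value, Node next) {
            this.value = value;
            this.next = next;
        }
    }

    private Node head;
    private int size;

    // Constant time: the new node points at the old head and nothing is shifted.
    void addFirst(int value) {
        head = new Node(value, head);
        size++;
    }

    int size() {
        return size;
    }

    // Copies the list into an array, front to back.
    int[] toArray() {
        int[] out = new int[size];
        int i = 0;
        for (Node n = head; n != null; n = n.next) {
            out[i++] = n.value;
        }
        return out;
    }

    // Array counterpart: a new, larger array is allocated and every
    // existing element is copied one slot to the right.
    static int[] insertAtFront(int[] source, int value) {
        int[] result = new int[source.length + 1];
        result[0] = value;
        System.arraycopy(source, 0, result, 1, source.length);
        return result;
    }

    public static void main(String[] args) {
        IntLinkedList list = new IntLinkedList();
        list.addFirst(3);
        list.addFirst(2);
        list.addFirst(1);
        // Prints [1, 2, 3]
        System.out.println(java.util.Arrays.toString(list.toArray()));
        // Prints [1, 2, 3]
        System.out.println(java.util.Arrays.toString(insertAtFront(new int[] {2, 3}, 1)));
    }
}
```

`addFirst` does the same amount of work no matter how long the list is, while `insertAtFront` does work proportional to the length of the array.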

MapReduce is a framework for processing large amounts of data residing on hundreds of computers; it is an extraordinarily powerful paradigm. MapReduce was first introduced by Google in 2004 in the paper "MapReduce: Simplified Data Processing on Large Clusters".

In this article we'll see how MapReduce processes data, using the Word Count program as an example. Yes, this is the world's most famous MapReduce program!
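Before looking at a real cluster, the three phases of Word Count can be sketched in plain Java on a single machine. This is a simplified, hypothetical model: `WordCountSketch` and its methods are names invented here, and it does not use the Hadoop API or distribute any work.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

final class WordCountSketch {
    // Map phase: emit a (word, 1) pair for every word in one line of input.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (!word.isEmpty()) {
                pairs.add(Map.entry(word, 1));
            }
        }
        return pairs;
    }

    // Shuffle phase: group all emitted values by their key.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), key -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce phase: sum the values collected for one word.
    static int reduce(List<Integer> counts) {
        int total = 0;
        for (int count : counts) {
            total += count;
        }
        return total;
    }

    // Runs map, shuffle, and reduce in sequence over all input lines.
    static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        shuffle(pairs).forEach((word, counts) -> result.put(word, reduce(counts)));
        return result;
    }

    public static void main(String[] args) {
        // Prints {brown=1, dog=1, fox=1, lazy=1, quick=1, the=2}
        System.out.println(wordCount(List.of("the quick brown fox", "the lazy dog")));
    }
}
```

In a real MapReduce job the map calls run in parallel on the machines that hold the input, and the framework performs the shuffle across the network before the reducers run.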